When OpenAI released ChatGPT, I was intrigued and eager to explore its capabilities. Initially based on GPT-3.5, it represented a significant technological advancement, and I was excited about the prospect of developing applications with it. Years ago, I attempted to create something akin to Copilot using different technologies, but the result was a slow and rudimentary system. With GPT, I was thrilled by the myriad possibilities it offered.

Upon gaining access to OpenAI's API (which wasn't fully public at the time), I began developing my own applications using GPT. I explored various libraries, including LangChain. While it was useful for researching code and examining prompt examples, I found the library itself lacking in quality and control. By control, I mean the ability to track the cost of each API request and to minimize both the number of requests and the prompt size. Providing concise data with only the necessary context can also yield better responses. Since OpenAI has periodically reduced API costs and released new GPT versions, the issues I encountered may not be as pronounced now. Nevertheless, I believe that LangChain and similar libraries are not suitable for production environments. The OpenAI API is straightforward to use directly, and for production systems, you often need full control over your software's operations.
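To make "control" a bit more concrete, here is a minimal sketch of per-request cost tracking. It assumes the token counts are taken from the usage data the API returns with a response (`prompt_tokens` and `completion_tokens`). The `CostTracker` class, the model name, and the prices are hypothetical placeholders for illustration, not real OpenAI rates and not the code from my application.

```python
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; placeholders, not real rates.
PRICES_PER_1K = {
    "example-model": {"prompt": 0.0015, "completion": 0.002},
}


@dataclass
class CostTracker:
    """Accumulates the estimated cost of every request the app makes."""

    total_usd: float = 0.0
    requests: list = field(default_factory=list)

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> float:
        """Estimate the cost of one request and add it to the running total."""
        price = PRICES_PER_1K[model]
        cost = (
            prompt_tokens * price["prompt"]
            + completion_tokens * price["completion"]
        ) / 1000
        self.requests.append((model, prompt_tokens, completion_tokens, cost))
        self.total_usd += cost
        return cost


tracker = CostTracker()
# In a real app these counts would be read from the response's usage data.
cost = tracker.record("example-model", prompt_tokens=1000, completion_tokens=500)
```

Keeping this in your own code, rather than behind a library, makes it easy to log the cost per feature or per chat and to spot prompts that have grown larger than they need to be.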

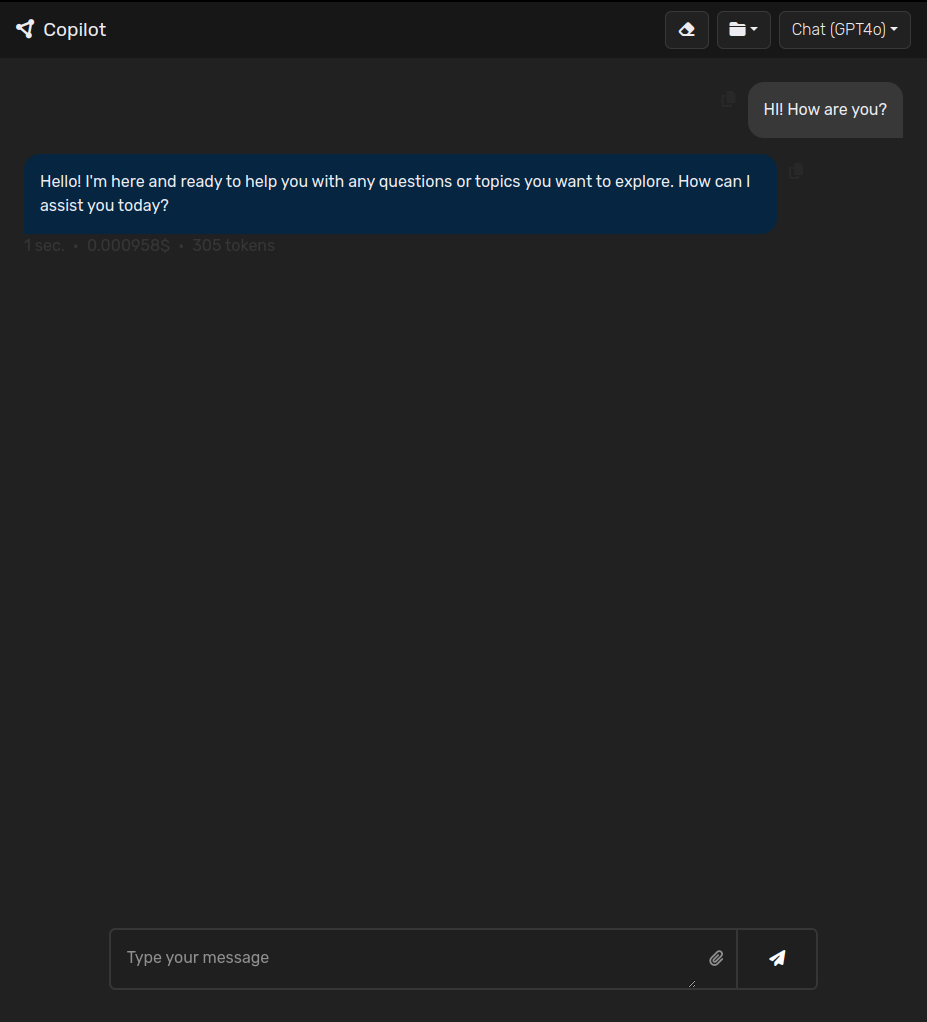

Returning to my journey with OpenAI, I eventually started using the official OpenAI Python library. I developed a few small scripts and then began creating my own implementation of ChatGPT (it's important to note that ChatGPT is an application that uses GPT).

I've conducted various experiments with my chat application, adding and removing features as needed. For example, during the initial development, I implemented:

- Different "profiles": Each profile has its own initial prompt, which helps direct conversations and provide initial context. This eliminates the need to repeat information. For instance, one profile includes details about me, my hobbies, and the technologies I use, leading to more relevant responses with less input. While this is now a common feature in different implementations, it was quite a standout feature at the time.

- Prepared prompt templates: These templates expedite regular tasks. For example, after interviewing candidates, I use a template to format feedback. I jot down notes during the interview, paste them into the prompt template, and receive almost-ready feedback within seconds, requiring only minor adjustments.

- Speech recognition: Although I removed this feature as it wasn't useful for me at the time, I might revisit it in the future.

- "Smart" copilot: I worked on my own Copilot implementation with an architecture capable of executing commands to handle more cases, such as retrieving information from Google search results or calling other ML models. Although development is currently on hold due to limited benefits for me, integrating it with my home automation system and speech recognition could be valuable in the future.

- News aggregator: I added the ability to read news from my Telegram account. I can now open a chat called "News Reader," and it reads the news from Telegram and aggregates it into a short report, with links in case I want to read something in detail. I also implemented a feature for some chats that extracts useful information from hundreds of messages I don't have time to read.

- Etc.
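The first two features, profiles and prompt templates, can be sketched in a few lines. This is a simplified illustration rather than my actual code: the profile texts, the template wording, and the helper names `build_messages` and `render_template` are all hypothetical. The only thing assumed from the API is the chat message format, a list of dictionaries with `role` and `content` keys.

```python
from string import Template

# Hypothetical profiles: each maps a name to its initial (system) prompt.
PROFILES = {
    "default": "You are a helpful assistant.",
    "personal": (
        "You are a helpful assistant. "
        "The user is a software engineer who works mostly with Python."
    ),
}

# Hypothetical template for turning raw interview notes into feedback.
TEMPLATES = {
    "interview_feedback": Template(
        "Rewrite these interview notes as structured feedback "
        "with strengths, weaknesses, and a recommendation:\n\n$notes"
    ),
}


def build_messages(profile: str, user_text: str) -> list[dict]:
    """Start a conversation with the profile's prompt, then the user's message."""
    return [
        {"role": "system", "content": PROFILES[profile]},
        {"role": "user", "content": user_text},
    ]


def render_template(name: str, **fields: str) -> str:
    """Fill a prepared prompt template with the given fields."""
    return TEMPLATES[name].substitute(**fields)


prompt = render_template("interview_feedback", notes="Strong SQL, weak on testing.")
messages = build_messages("personal", prompt)
```

The resulting `messages` list is what would be sent to the chat API. Because the profile lives in the system message, the user message only has to carry the task itself.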

In conclusion, my journey with OpenAI's GPT has been both challenging and rewarding. It has allowed me to experiment with cutting-edge technology and explore new possibilities in application development. And this is not the end of the experiments 🙂